I have two machines which are my original two CentOS 7 installs, which date back several years. At that time, I was running an old (now ancient) version of cobbler which had a history of blowing up when I tried updating to newer versions (more on that in a different, future post), and there was no support for RHEL/CentOS 7 installs using it. And I don’t mean that it was just missing the “signatures” and what defines an OS version to cobbler… the network boot just went ***BOOM*** as it was bringing up the installer. And having a new-to-me Dell PE2950III which could actually do hardware virtualization, and impatiently wanting to get it up, along with a VM to start playing with… I kinda painted myself into a corner. But that is a long story… and is a good lesson as to why patience is good.

Now is probably a good point to plan for Murphy, than to suffer a visit by the Imp of the Perverse… Actions such as verified backups, VM snapshots, or VM clones are ways to practice safe hacking…

One of the issues I have been having with the VM, which I have used for my development on RHEL/CentOS 7, has been the size of the / and /var filesystems. To say that they were “painfully small” is like saying that the crawler’s at KSC will give you a painfully very flat foot… 8GB for the root filesystem, and only 4GB for /var… and I never bothered to look into details until today, figuring I would just replace this VM after I figured out what all I had done over 18+ months of quick admin hacks (install this, change this, upgrade this….) on an ad-hoc basis with no notes… FAR from my old norm, where outside of say the contents of /home or a few files under /etc, all I had to do was tell cobbler and the host… “Reinstall this machine”, and come back a couple of hours later to find everything including my local customizations, third party software such as the eclipse IDE, etc. all reinstalled. But this machine…it had become the unruly teenager…

Here is what df was telling me… **AFTER** I told yum to clean its cache numerous times the past week…

# df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/mapper/builds-root 8378368 7623100 755268 91% /

devtmpfs 1923668 0 1923668 0% /dev

tmpfs 1940604 0 1940604 0% /dev/shm

tmpfs 1940604 25304 1915300 2% /run

tmpfs 1940604 0 1940604 0% /sys/fs/cgroup

/dev/sda1 1038336 227208 811128 22% /boot

/dev/mapper/builds-var 4184064 4028388 155676 97% /var

tmpfs 388124 8 388116 1% /run/user/0

Since I was about ready to update my development WordPress installation on this machine. And so… time to grab the rattan and go beat this machine into at least temporary submission (and kick myself repeatedly in the process). And so we begin…

Just how big did I create the virtual disk for this VM??

While in cobbler, I have scheduled this machine to be rebuilt with a 64GB virtual drive, I was wondering how big it was at the moment. And so, I do this:

[root@cyteen ~]# virsh vol-info --pool default wing-1-sda.qcow2

Name: wing-1-sda.qcow2

Type: file

Capacity: 64.00 GiB

Allocation: 24.51 GiB

[root@cyteen ~]# ssh wing-1 pvdisplay /dev/sda2

--- Physical volume ---

PV Name /dev/sda2

VG Name builds

PV Size <16.01 GiB / not usable 3.00 MiB

Allocatable yes

PE Size 4.00 MiB

Total PE 4097

Free PE 1

Allocated PE 4096

PV UUID LHhXlZ-jmk5-tYTN-Ql67-dwss-4GxB-wp9rj1

[root@cyteen ~]# ssh wing-1 vgdisplay

--- Volume group ---

VG Name builds

System ID

Format lvm2

Metadata Areas 1

Metadata Sequence No 4

VG Access read/write

VG Status resizable

MAX LV 0

Cur LV 3

Open LV 3

Max PV 0

Cur PV 1

Act PV 1

VG Size 16.00 GiB

PE Size 4.00 MiB

Total PE 4097

Alloc PE / Size 4096 / 16.00 GiB

Free PE / Size 1 / 4.00 MiB

VG UUID xb9wg7-Tg8D-WV91-blt6-QCSK-2FyL-NMH5tp

[root@cyteen ~]# ssh wing-1 sfdisk -s /dev/sda

67108864

[root@cyteen ~]# ssh wing-1 sfdisk -l /dev/sda

Disk /dev/sda: 8354 cylinders, 255 heads, 63 sectors/track

Units: cylinders of 8225280 bytes, blocks of 1024 bytes, counting from 0

Device Boot Start End #cyls #blocks Id System

/dev/sda1 * 0+ 130- 131- 1048576 83 Linux

/dev/sda2 130+ 2220- 2090- 16784384 8e Linux LVM

/dev/sda3 0 - 0 0 0 Empty

/dev/sda4 0 - 0 0 0 Empty

OK… 64GB, only 24GB of which are allocated… “What is go on? Stupid Live CD!!!” sums up my reaction… politely.

You Only Live Twice, Mr. Bond…

At this point, frustrated… I proceeded to check a few things, then do an ad-hoc fix. In retrospect… I should have also taken advantage of a feature of virtual machines and done a snapshot, but… something to remember next time… And this is a good argument for using scripts, as well as tools like cobbler and Ansible, and back-patching your scripts as you think of things you could have done better. But as is the case with this sorts of things, you expand from the outside in, and so… first, the disk partition table. While I have used various incarnations of fdisk, sfdisk and other tools for the partition table which is a part of the boot sector, right now, I am more fond of parted outside of kickstart scripts (a bit on that in another post about blivet, hopefully in the near future), and so, I do the following:

[root@wing-1 var]# parted /dev/sda

GNU Parted 3.1

Using /dev/sda

Welcome to GNU Parted! Type 'help' to view a list of commands.

(parted) p

Model: ATA QEMU HARDDISK (scsi)

Disk /dev/sda: 68.7GB

Sector size (logical/physical): 512B/512B

Partition Table: msdos

Disk Flags:

Number Start End Size Type File system Flags

1 1049kB 1075MB 1074MB primary xfs boot

2 1075MB 18.3GB 17.2GB primary lvm

(parted) unit s

(parted) p

Model: ATA QEMU HARDDISK (scsi)

Disk /dev/sda: 134217728s

Sector size (logical/physical): 512B/512B

Partition Table: msdos

Disk Flags:

Number Start End Size Type File system Flags

1 2048s 2099199s 2097152s primary xfs boot

2 2099200s 35667967s 33568768s primary lvm

(parted) resizepart 2 -1

(parted) p

Model: ATA QEMU HARDDISK (scsi)

Disk /dev/sda: 134217728s

Sector size (logical/physical): 512B/512B

Partition Table: msdos

Disk Flags:

Number Start End Size Type File system Flags

1 2048s 2099199s 2097152s primary xfs boot

2 2099200s 134217727s 132118528s primary lvm

(parted) q

Information: You may need to update /etc/fstab.

[root@wing-1 var]# partprobe

To sum up things, I use parted to print the old partition table, using both its “compact” units, and sectors, switching to the latter between the two commands. And then the resizepart 2 -1 says to resize partition 2 to end at the end of the disk (“-1”). Then I wrap things up with showing the partition table again and quitting parted. And lastly, the partprobe tells the kernel to reload the partition tables, just to be sure it has the latest information.

What’s next dedushka???

The next layer nested in this Matryoshka/Patryoshka (yes, there are male nested Russian dolls, which showed up during the Perestroika, and since I am a guy, and this post’s theme seems to be Bond…) doll is the LVM physical volume. For that, we have LVM to do the lifting for us. Here, we will follow the same pattern of show what we have, expand, reload and then show to verify.

[root@wing-1 var]# pvdisplay

--- Physical volume ---

PV Name /dev/sda2

VG Name builds

PV Size <16.01 GiB / not usable 3.00 MiB

Allocatable yes

PE Size 4.00 MiB

Total PE 4097

Free PE 1

Allocated PE 4096

PV UUID LHhXlZ-jmk5-tYTN-Ql67-dwss-4GxB-wp9rj1

[root@wing-1 var]# pvresize /dev/sda2

Physical volume "/dev/sda2" changed

1 physical volume(s) resized / 0 physical volume(s) not resized

[root@wing-1 ~]# pvscan

PV /dev/sda2 VG builds lvm2 [<63.00 GiB / <27.00 GiB free]

Total: 1 [<63.00 GiB] / in use: 1 [<63.00 GiB] / in no VG: 0 [0 ]

[root@wing-1 var]# pvdisplay

--- Physical volume ---

PV Name /dev/sda2

VG Name builds

PV Size <63.00 GiB / not usable 2.00 MiB

Allocatable yes

PE Size 4.00 MiB

Total PE 16127

Free PE 12031

Allocated PE 4096

PV UUID LHhXlZ-jmk5-tYTN-Ql67-dwss-4GxB-wp9rj1

As you can see, the pvresize with just the partition says to expand it to the size of the disk partition, though we could have also expanded it only part of the way. And now, opening up to see the next layer, we have the volume group, which we need only check, and do not need to do a vgextend, as we would have had we created another partition at the disk partition table level.

[root@wing-1 var]# vgdisplay builds

--- Volume group ---

VG Name builds

System ID

Format lvm2

Metadata Areas 1

Metadata Sequence No 5

VG Access read/write

VG Status resizable

MAX LV 0

Cur LV 3

Open LV 3

Max PV 0

Cur PV 1

Act PV 1

VG Size <63.00 GiB

PE Size 4.00 MiB

Total PE 16127

Alloc PE / Size 4096 / 16.00 GiB

Free PE / Size 12031 / <47.00 GiB

VG UUID xb9wg7-Tg8D-WV91-blt6-QCSK-2FyL-NMH5tp

The disk is now enough…

Enough to give me some breathing room that is, and finish back engineering all my ad-hoc changes on this VM to allow it to die on another day. I can now expand /var to a more comfortable 16GB, quadruple what I started with. To do that, LVM has given us lvresize, and the filesystem has given us its own tool. And I am going to cover both of these together, and also address the growing scarcity of space on / as well. Think of it as an odd nesting doll, where at some point, you find two smaller dolls back to back (kinda like some onions do).

Were I using ext3 or ext4, things would be somewhere between somewhat more difficult to a pain in the six. This is because those filesystems either do not support what one might call “hot-growth”, where you can grow the filesystem without remounting it, or may only do so in limited cases… (honestly, after working with ext3, I followed the same path when it came to ext4, even though it may have worked with the filesystem mounted. But more often these days, I am using xfs… and it allows me to pull off a trick shot.

[root@wing-1 html]# lvresize -L 16g /dev/builds/var

Size of logical volume builds/var changed from 4.00 GiB (1024 extents) to 16.00 GiB (4096 extents).

Logical volume builds/var successfully resized.

[root@wing-1 html]# lvdisplay

--- Logical volume ---

LV Path /dev/builds/var

LV Name var

VG Name builds

LV UUID sBnS4b-Bgrq-sIb0-f8Rz-67ey-UfR3-2FlUFv

LV Write Access read/write

LV Creation host, time planys-test.home.ka8zrt.com, 2016-09-16 00:12:59 -0400

LV Status available

# open 1

LV Size 16.00 GiB

Current LE 4096

Segments 2

Allocation inherit

Read ahead sectors auto

- currently set to 8192

Block device 253:2

[root@wing-1 html]# xfs_info /var

meta-data=/dev/mapper/builds-var isize=512 agcount=4, agsize=262144 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=0 spinodes=0

data = bsize=4096 blocks=1048576, imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal bsize=4096 blocks=2560, version=2

= sectsz=512 sunit=0 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

[root@wing-1 html]# xfs_growfs /var

meta-data=/dev/mapper/builds-var isize=512 agcount=4, agsize=262144 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=0 spinodes=0

data = bsize=4096 blocks=1048576, imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal bsize=4096 blocks=2560, version=2

= sectsz=512 sunit=0 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

data blocks changed from 1048576 to 4194304

[root@wing-1 html]# df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/mapper/builds-root 8378368 7623060 755308 91% /

devtmpfs 1923668 0 1923668 0% /dev

tmpfs 1940604 0 1940604 0% /dev/shm

tmpfs 1940604 25304 1915300 2% /run

tmpfs 1940604 0 1940604 0% /sys/fs/cgroup

/dev/sda1 1038336 227208 811128 22% /boot

/dev/mapper/builds-var 16766976 2487280 14279696 15% /var

tmpfs 388124 8 388116 1% /run/user/0

The real magic is that xfs allowed me to to the exact same thing with /, without the need to boot from alternate media so that I do not have the logical volume and filesystem active. The only issue is, where I know the process to shrink an ext3 filesystem to a smaller size (which is riskier than growing it), everything I have read to date for xfs says it is a backup, tear-down, replace and reload. But you know what… I will gladly give up the ability to shrink if I get the ability to grow / without the prior hassles. Especially when it gives me this:

[root@wing-1 ~]# df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/mapper/builds-root 16766976 7623284 9143692 46% /

devtmpfs 1923660 0 1923660 0% /dev

tmpfs 1940600 0 1940600 0% /dev/shm

tmpfs 1940600 8932 1931668 1% /run

tmpfs 1940600 0 1940600 0% /sys/fs/cgroup

/dev/sda1 1038336 227208 811128 22% /boot

/dev/mapper/builds-var 16766976 2640112 14126864 16% /var

tmpfs 388120 4 388116 1% /run/user/0

And now, for a Quantum of Solace…

While I still have lots of things to deal with in the future, at least I have a tiny bit of comfort in knowing I am not going to be banging my head on an undersized filesystem in doing so… I have enough to worry about without having to somehow having to limp along or otherwise suffer. And with luck, and a new $job, maybe I can take a few hours to hack a few custom buttons (or a dropdown for them) into the new WordPress editor, such as for marking up commands, filenames, and the like, without having to constantly shift over to editing the blocks as HTML to put in what is normally simple, inline markup.

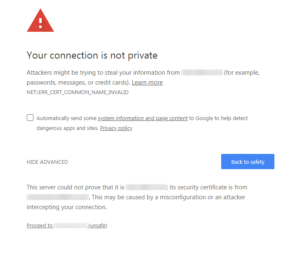

Notice… this has the “Proceed to…” link at the bottom, which the other screen I got when using the FQDN did not. But going this route, I was able to both re-enable the ability to use HTTP as well as HTTPS, turn off forced redirection by the app, and thanks to some digging, find out how to change these two settings from the CLI. And so, in case browsers across the board decide to do away with the “Proceed to” link in all cases, I am putting the info about changing the settings here for general consumption.

Notice… this has the “Proceed to…” link at the bottom, which the other screen I got when using the FQDN did not. But going this route, I was able to both re-enable the ability to use HTTP as well as HTTPS, turn off forced redirection by the app, and thanks to some digging, find out how to change these two settings from the CLI. And so, in case browsers across the board decide to do away with the “Proceed to” link in all cases, I am putting the info about changing the settings here for general consumption.